MySQL: TEXT vs. VARCHAR Performance

Starting with MySQL 5.0.3, the maximum field length for VARCHAR fields was increased from 255 characters to 65,535 characters. This is good news, as VARCHAR fields, as opposed to TEXT fields, are stored in-row for the MyISAM storage engine (InnoDB has different characteristics). TEXT and BLOB fields are not stored in-row — they require a separate lookup (and a potential disk read) if their column is included in a SELECT clause. Additionally, the inclusion of a TEXT or BLOB column in any sort will force the sort to use a disk-based temporary table, as the MEMORY (HEAP) storage engine, which is used for temporary tables, requires. Thus, the benefits of using a VARCHAR field instead of TEXT for columns between 255 and 65k characters seem obvious at first glance in some scenarios: potentially less disk reads (for queries including the column, as there is no out-of-row data) and less writes (for queries with sorts including the column).

Following a review of my MySQL server instance’s performance metrics (mysqltuner.sh and mysql tuning-primer.sh point out interesting things), I found that approximately 33% of the server’s temporary tables used on-disk temporary tables (as opposed in-memory tables). On-disk temporary tables can be created for several reasons, most notably if the resulting table will be larger than the minimum of MySQL’s tmp_table_size/max_heap_table_size variables OR when a TEXT/BLOB field is included in the sort.

Since any operation that uses the disk for sorting will be noticeably slower than using RAM, I started investigating why so many of my temporary tables went to disk. One of my website’s most heavily used MyISAM tables contained two TEXT columns, which were textual descriptions of an object, submitted by the website’s visitors. The table schema was created prior to MySQL v5.0.3, when VARCHAR fields were limited to 255 characters or less. For this object, I wanted to allow descriptions larger than 255 characters, so the table utilized the two TEXT fields to store this data.

So my first thought in reducing the number of on-disk temporary tables was to convert these TEXT fields to VARCHAR. I sampled the column’s values, and found that the two fields’ maximum input sizes were currently around 8KB. Not thinking too much about what size I wanted to support, I decided that I could set both fields to VARCHAR(30000) instead of TEXT. I changed the fields, verified through automated tests that everything still worked, and called it a night.

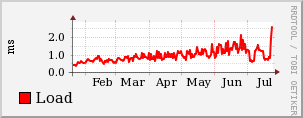

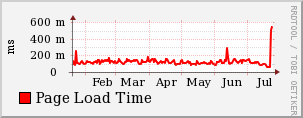

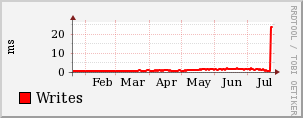

Over the next two days, I noticed that there were several alarming metrics trending the wrong way. I utilize Cacti to monitor several hundred server health metrics — it is great for showing trends and alerting about changes. Unfortunately, it was reporting that server load, page load time and disk activity were all up significantly — especially disk writes. Wondering if it was a fluke, I left the server alone for another day to see if it would subside, but the high load and disk writes continued consistently. The load was causing real user-perceived performance impacts for the website’s visitors, causing average page load time to increase from 70ms to 470ms.

Here’s what the Cacti graphs looked like:

Wouldn’t you be alarmed?

Not wanting to run an intensive performance review or diagnostics on the live website, I came up with a plan for how I would diagnose what the problem was:

- Enable temporary lightweight tracing on the server to try to determine the source of the increased disk activity (iotop or dstat).

- If MySQL is causing most of the disk activity, temporarily revert the VARCHAR(30000) columns back to TEXT, as I suspected they were somehow the cause of the slowdown.

- Perform a deeper analysis of the problem on a separate machine.

Running iotop on the server confirmed that a majority of the disk writes were coming from MySQL. Additionally, after I reverted the columns to TEXT, the server load and page load times went back to normal.

So why did my seemingly obvious “performance win” end up as a performance dud? Shame on me for not testing and verifying the changes before I pushed them live!

I didn’t want to do any more diagnosis of the problem on the live Linux server — there’s no point in punishing my visitors. I have a separate Linux development server that I could have used to dig a little deeper, but I’m more comfortable doing performance analysis on Windows, and luckily, MySQL has Windows ports.

For almost all of my performance analysis work, I use the excellent Windows Performance Tools (WPT) Kit (using ETW and xperf). If you haven’t used the WPT and xperf tools before, there are some good guides on trace capturing using ETW and visual analysis via xperfview on MSDN. ETW is a lightweight run-time tracing environment for Windows. ETW can trace both kernel (CPU, disk, network and other activity) and user mode (application) events, and saves them to a .ETL file that can later be processed. The Windows Performance team and many other teams in Windows regularly use ETW/xperf for a majority of their performance analysis.

To figure out what’s going on with our VARCHAR columns, I first needed to ensure that I could replicate the problem on my Windows MySQL machine. I installed MySQL 5 and loaded a snapshot of my live database into the instance.

I then looked at the MySQL Slow Query Log from the real server to see what queries were taking a long time (>1 second). There were thousands of instances of a query that looked something like this:

SELECT t2.* FROM table1 t1, table2 t2 WHERE t2.t1id = t1.id ORDER BY t1.id LIMIT 0, 10

Which looks innocent enough, but Table2 is the table that I changed the two TEXT fields to VARCHAR, and I’m querying all of the columns (SELECT *) from it. Before, because of the TEXT column, this query would’ve used an on-disk temporary table for the results (because the MySQL manual tells us this is the case for results that need temporary tables and have TEXT columns). So why is this query appearing to be so much slower now?

First of all, I checked how this query responded on my Windows MySQL instance:

mysql> SELECT t2.* FROM table1 t1, table2 t2 WHERE t2.t1id = t1.id ORDER BY t1.id LIMIT 0, 10; ... 10 rows in set (1.71 sec)

This confirmed the issue appeared on my development machine as well! This query should be nearly instantaneous (less than 50ms), and 1,710 milliseconds is a long time to wait for a single query of many in a page load.

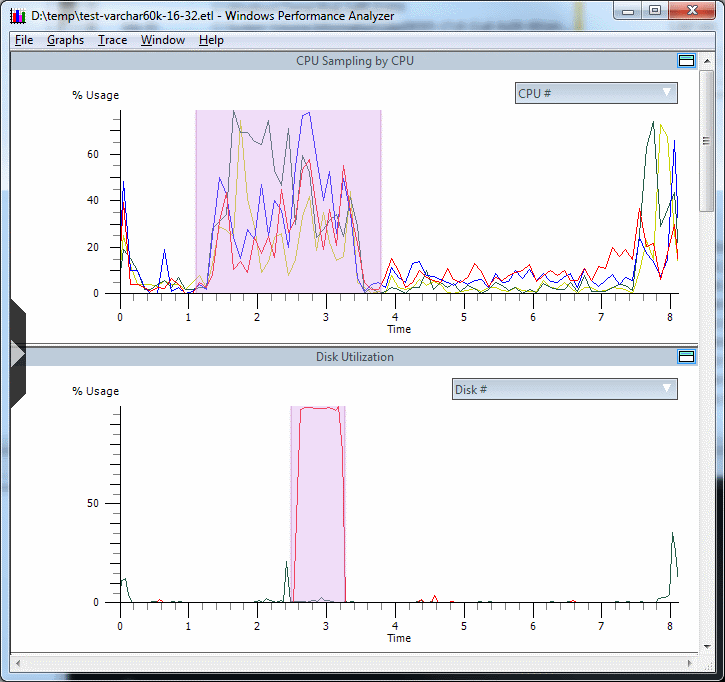

My guess at this point was it had something to do with temporary tables. And disks. Since that was what I was trying to improve with my TEXT to VARCHAR change, it only makes sense that I somehow made it worse. So to validate this theory, I enabled a bit of lightweight ETW tracing to see how the OS handled the query.

I started ETW tracing to get basic CPU and disk IO information:

xperf -on base+fileio

Then I re-ran the above query in the MySQL command line, and saved the trace to disk (trace.etl) after the query finished:

xperf -d trace.etl

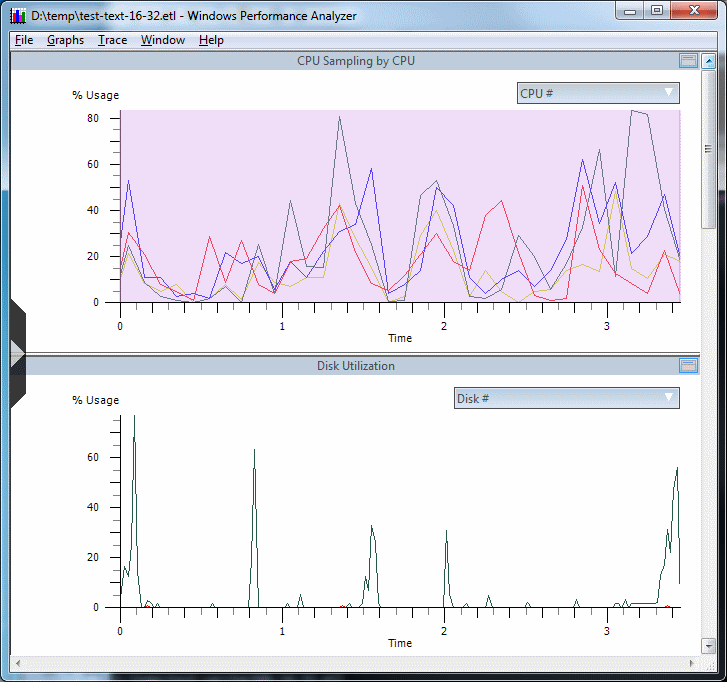

Loading the trace up in xperfview showed some interesting things:

xperfview trace.etl

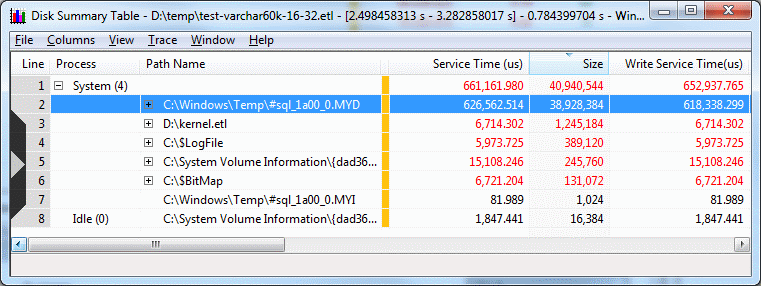

From a CPU usage perspective, the CPU (on my quad-core system) seemed to be pretty active, but not 100% utilized. Looking at the CPU Sampling Summary Table, I found that mysqld-nt.exe was using 1,830ms / 17% of my CPU (essentially 68% of one of the cores). Not bad, but not maxed out either. But what’s interesting here was the disk utilization graph. For a period of ~700ms, we’re 100% utilized. Highlighting that region and viewing the summary table showed where we spent our time:

#sql_1a00_0.MYD is a temporary table from MySQL (which can be confirmed from the File IO graph). In this case, our single query caused 38MB of disk writes and ~626ms to write/read it back in.

Huh?

At this point, I wanted to double-check that the TEXT to VARCHAR change caused this. I updated the column to TEXT, and re-run the same query:

mysql> SELECT t2.* FROM table1 t1, table2 t2 WHERE t2.t1id = t1.id ORDER BY t1.id LIMIT 0, 10; ... 10 rows in set (0.03 sec)

Well, 0.03 seconds is a lot faster than 1.71 seconds. This is promising. I took another ETW trace of the query on with the TEXT field:

After switching back to TEXT fields, mysql used ~30ms of CPU and caused no disk activity.

Now that I knew what was causing the slowdown, I wanted to try to fully understand why this was the case. Remember, I started down this path originally because I found that I had a high portion of temporary tables were on-disk temporary tables. In the interest of seeing less disk activity on my server, I attempted to change several TEXT columns (which can cause on-disk temporary tables) to VARCHAR(30000) columns. However, I didn’t fully look into what was causing the on-disk temporary tables, and instead just guessed. As a result, my server’s perf tanked!

Now’s a good time to review the first paragraph of this post. There are several reasons MySQL may use an internal temporary table. One interesting quote:

Such a [temporary] table can be held in memory and processed by the

MEMORYstorage engine, or stored on disk and processed by theMyISAMstorage engine.

and

If an internal temporary table is created initially as an in-memory table but becomes too large, MySQL automatically converts it to an on-disk table. The maximum size for in-memory temporary tables is the minimum of the

tmp_table_sizeandmax_heap_table_sizevalues. This differs fromMEMORYtables explicitly created withCREATE TABLE: such tables, themax_heap_table_sizesystem variable determines how large the table is permitted to grow and there is no conversion to on-disk format.

So a temporary table can start out as a MEMORY table, then if MySQL realizes it’s too big for tmp_table_size and max_heap_table_size, it may convert it to a MyISAM table on the disk. One caveat with the MEMORY engine is:

MEMORYtables use a fixed-length row-storage format. Variable-length types such asVARCHARare stored using a fixed length.

How did this behavior affect me, and cause the 30ms query to take 1,710 ms? Well let’s start out with the two newly VARCHAR(30000) columns. In a normal MyISAM table, with a dynamically sized row, these two columns only take as much space as the data they contain (plus 1 byte). That is, if I had a row and these two columns only had 10 bytes of data in them, the row size would be (10+1)*2+[other columns]. However, if I happened to convert this MyISAM table to use fixed-length rows, or I was using the MEMORY storage engine, the row size would be 30000*2+[other columns]. Currently, according to my dataset, these dynamically sized rows only required an average of 1,648 bytes per row.

And that’s the crux of the problem. My query above, simple enough, requires a temporary table to do its work. We can verify this via the MySQL EXPLAIN command:

mysql> EXPLAIN SELECT t2.* FROM table1 t1, table2 t2 WHERE t2.t1id = t1.id ORDER BY t1.id LIMIT 0, 25; +----+-------------+-------+--------+---------------+---------+---------+------ | id | select_type | table | key | rows | Extra | +----+-------------+-------+--------+---------------+---------+---------+------ | 1 | SIMPLE | t2 | NULL | 8813 | Using temporary; Using filesort | | 1 | SIMPLE | t1 | PRIMARY | 1 | Using index | +----+-------------+-------+--------+---------------+---------+---------+------

(I trimmed a couple columns to fit to the page’s width).

Here, we see our t2 table Using temporary. MySQL converted the 8,813 dynamic-row columns to fixed-length, which expanded the VARCHARS to their full size: approximately 60,600 byes per-row. That’s 8,813 rows * 60,600 bytes = 534,067,800 bytes to deal with! The server’s tmp_table_size variables decided this wasn’t good for an in-memory temporary table, so MySQL ended up moving a lot of this work to disk. As a result, we had ~700ms of disk writes with this query when using VARCHAR(30000) columns.

There are a couple ways to avoid this behavior in MyISAM tables:

- Use TEXT fields, with their known caveats.

- Use a smaller, more reasonable VARCHAR size. These fields probably don’t need to hold more than 10k of data. One could reduce their size to 10k or smaller, or even move them to another table and only retrieve them when necessary.

- Fiddle with the tmp_table_size and max_heap_table_size variables. These two variables dictate which queries use on-disk temporary tables, as described here. They are set at approximately 35mb/16mb by default (on my Windows MySQL 5.1 instance).

I made a couple changes. I changed one of the original 30k fields to 10k, and and changed the other one to 1k. This reduced the potential row size in MEMORY temporary tables tremendously. I also upped the tmp_table_size and max_heap_table_size variables to 128MB on my server. The combination of these two changes ensured that the specific query above was no longer causing all of the performance issues (for now). I should probably move the 10k field to another table (or back to TEXT) to be sure.

I probably didn’t need to use ETW and xperf here to look into things. Since I was aware that the changes I made to the database had a high correlation with the slow-downs I was seeing, and reverting these changes fixed the issue, I could have probably figured out what was going on by reading the MySQL manual a bit more. But I like to dig into things, and I think xperf can help visually communicate what’s going on with your system for problems like this. Seeing how much blocking IO a single query can cause really sheds light on things!

Admittedly, the server MySQL is running on also hosts a web server and multiple sites. A dedicated SQL server with fast, dedicated disks would help minimize problems like this.

One interesting note is that MySQL version 5.1 on Windows doesn’t have the same IO patterns as version 5.0 did – I see the same File IO for temporary tables with the VARCHAR fields, but not the same amount of disk activity. This could mean that 5.1 memory maps or caches a lot of the on-disk temporary file and doesn’t end up actually writing it out to disk. I am unsure why.

And again, InnoDB has different performance characteristics. I did not do any testing on InnoDB.

So at the end of the day, my server is back to normal, and I’ve learned a little bit more about how MySQL works. I hope you did too.

This is a very good article! Thanks for taking the time to share.

Thanks for the article. And I like your way of digging things. Sorry for all the work “to put the server back to normal”.

Really nice article.

Well, this one solves my long time quesiton about vchar and text.

Thanks for sharing!

Nice read, but this a good example how not to do optimization. To change stuff based on assumption, without performance profiling, is the wrong way of doing things. Your own testing afterwards shows that the query only took .03 second and I would guess that there is several other areas in your code that is much slower than that.

Hi Nic,

Thanks for your post! Just pondering this issue (without actually testing it) there might be another solution: Have you considered performing a smaller join operation (i.e. on t1.id and t2.id only) in a subquery first and left joining the changed VARCHAR column later?

Kind regards,

Melle

Very informative post about some of the MySQL varchar nuances I was not aware of. I usually limit them to around 8k then switch to text, but for no good reason that I can remember. This in depth explanation will definitely come in useful.

Great article. Did you also try creating these fields as indexes, I would be very interested to see if by making them both indexes if this has the same result…..

Thanks for great article, I didn’t realize the performance difference is so big. Greets, Avoin Tyopaikka

@Shetil

Agreed, and Shame On Me for not testing any of this first! I made the original change blindly with preconceived assumptions about how my change would behave. Had I tested this first, I would have noticed the problem right away.

As for trying to improve the original 30ms query, the query was actually a significant part of the average page load time. The page that it was on took an average in 70ms before my change, so the query was contributing 42% of that time.

@Melle

That might work be a good solution to this type of issue as well — great idea.

you’re Testing maniac =)

Hi,

I am working on one project. We were asking users to share their stories. The file in DB against that story is varchar (512) , but the users posted stories greater than varchar (512), now client wants us to retrieve full story.

Is there any way in mysql where i can retrieve the full stories OR simple this is not possible ?

Really good article. Thank you for taking the time to explain us.

Great article and very useful for me. Thanks for sharing.

Thank you nic, it let me know more about how mysql works.

I think store text in another table is always a good idea.

Great article really helped me . thx

Perfect! Helped a lot!

Thanks for this article. I was just about to try converting my own text columns to varchar, but now I think I won’t do that.

loved this very informative well written article ! what about using an in-memory filesystem for mysql tmp dir ? so even ‘on disk’ temporary table would fit in ram.

Yes, that’s a good option as well. Be sure to read the MySQL manual for times that you shouldn’t use

tmpfs(eg, replication slaves).http://dev.mysql.com/doc/refman/5.0/en/temporary-files.html

Thanks for this! It’s an interesting journey and well documented. I’d never really thought about the impact of a temporary table having a different storage engine to the “source” tables.

So the key takeout here is VARCHARs are represented as fixed-size in memory tables (i.e. effectively as CHARS and losing their advantage of shrinking to the data), whereas TEXT columns cause temp tables to skip memory entirely and go to disk. Both can run slow… depending on the shape of your data.

This is why most of the S.O. posts and mySQL say benchmark for your hardware and data I guess!

And another reason to avoid unnecessary select *’s

Sorry for my English. Thank you for your post. I’ve encountered same problem and found this post looking for a solution. I have 2 tables with about 400000 and 200000 rows which are joined by FK and PK. Minimizing the slow request I noticed that there are 3 conditions which altogether make the request slow: 1) join or 2 tables in FROM and their connection in WHERE; 2) order by (even simple order by id (PK); 3) two fields in SELECT are varchar(2000).

I found the following explanation from MySQL developers: http://dev.mysql.com/doc/refman/5.0/en/order-by-optimization.html and it seems the problem is in their “modified filesort algorithm”. For me it looks like a MySQL bug. Simple question: why at all MySQL operates with varchar fields of all row ignoring the fact that we need only a few rows in result? In your example you limited the result. I did the same limiting to 1 record. What stops MySQL from generation only some row ids on the first phase and asking later full number of fields specified in SELECT? They did it before optimization. So I think they have to optimize algorithm better.

And at the end the simple solution.

I changed my SQL from

SELECT

r.query, r.headers

FROM

log_request r , log_request_estimate re

WHERE

r.id = re.request_id

ORDER BY

r.id

LIMIT 1;

wrapping it with another SELECT:

SELECT body, headers FROM log_request

WHERE id IN (

SELECT r.id

FROM

log_request r , log_request_estimate re

WHERE

r.id = re.request_id and r.id<1000000

)

ORDER BY id

LIMIT 1;

I don't know exactly why does it work and I haven't yet tried it with my original request which is much more complex.

Explain command gives me the same result as for the slow request:

(id, select_type, table, type, possible_keys, key, key_len, ref, rows, Extra) VALUES

('1', 'SIMPLE', 'this_', 'index', 'FKB3DF0473B2FF1006', 'FKB3DF0473B2FF1006', '9', NULL, '76512', 'Using index; Using temporary; Using filesort'),

('1', 'SIMPLE', 'request1_', 'eq_ref', 'PRIMARY', 'PRIMARY', '8', 'petapi.this_.request_id', '1', '')

Sorry, I was wrong saying that explain plans are the same for the second (quick) reuqest there is another explain plan:

(id, select_type, table, type, possible_keys, key, key_len, ref, rows, Extra) VALUES

(1, ‘PRIMARY’, ‘log_request’, ‘index’, NULL, ‘PRIMARY’, ‘8’, NULL, 1, ‘Using where’),

(2, ‘DEPENDENT SUBQUERY’, ‘re’, ‘ref’, ‘FKB3DF0473B2FF1006’, ‘FKB3DF0473B2FF1006’, ‘9’, ‘func’, 1, ‘Using where; Using index’),

(2, ‘DEPENDENT SUBQUERY’, ‘r’, ‘eq_ref’, ‘PRIMARY’, ‘PRIMARY’, ‘8’, ‘func’, 1, ‘Using where; Using index’)

@ Andrey

Makes sense! Your first query will join the two tables, apply the WHERE, then ORDER, then LIMIT to the first row. This join might be too big for the temp tables, so it persists to disk. The second query does the sub-query first (without a JOIN) then does a simple ID match.

Many years later this post helped me a ton. I noticed the same drop in performance on a batch write of 8000 rows, basically transferring raw data from Redis into MySQL with some massaging in between (scraper data –> sql).

100 columns long, 1/3 decimal, 1/3 text, 1/3 string. I originally stored the string as text, worked well, started using varchar(511) at some point, performance for the batch write dropped immensely. I’m no longer diving into my swap space and in fact my CPU utilization dropped very significantly too.

This post confirmed to me what I suspected was happening but don’t have the debugging chops to prove — I also couldn’t find anything on SO directly (though ultimately a comment from SO led me here).

Thanks!

Thanks for the useful research. This is still valid in 2017 with MySQL 5.7 as the MEMORY engine still uses a fixed length row format and the defaults for tmp_table_size and max_heap_table_size haven’t changed (16MB).

Is this behavior same with VARCHAR or TEXT declared inside triggers?

Thanks for great article